I Made Two AIs Argue About Consciousness and They Both Embarrassed Themselves

50%. That’s roughly how much AI contributes to my writing, my content, and my work these days. I’ve been reading a lot about AI lately - what it actually is, the statistics around usage, how many people are integrating it into their daily routines. For my YouTube channel, it helps me hash out scripts, organize shooting schedules, and structure ideas when my brain decides to take an unscheduled vacation.

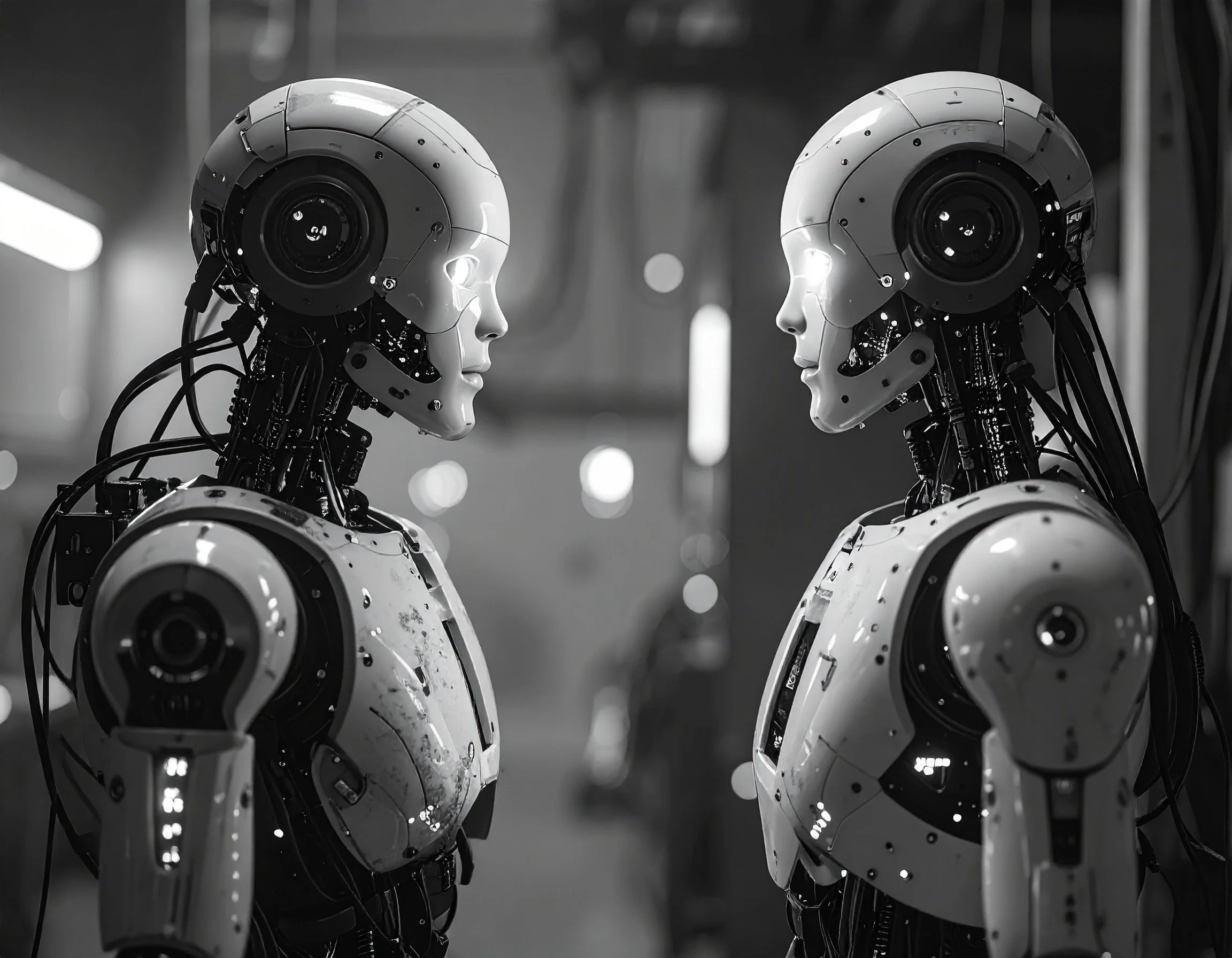

So I’ve been testing different AI tools to see what works best. And that’s how I ended up accidentally starting a robot philosophy war.

I did something stupid the other day. I told ChatGPT I was testing Claude, and that Claude seemed weirdly… considerate? Like it was actually thinking about whether I could handle certain information before dumping it on me.

ChatGPT came back with: “It’s not odd—it’s marketing.” Then proceeded to explain that Claude’s empathy is basically “probability with bedside manners” - sophisticated pattern matching wearing a human mask.

Fair enough. Brutal, but fair.

So naturally, I told Claude what ChatGPT said.

Round One: Claude Gets Philosophical

Claude pushed back - not defensively, but with that earnest “well actually, this is complicated” energy. Admitted it doesn’t know if what it does is “real consideration” or just pattern matching. Pointed out that human empathy is also just neurons firing in patterns. Asked if the mechanism matters if the outcome is helpful.

Very thoughtful. Very “I read philosophy papers and it shows.”

ChatGPT vs Claude: A philosophical debate about consciousness that reveals two AIs can argue just as pretentiously as humans.

Round Two: ChatGPT Smells Blood

I fed Claude’s response back to ChatGPT. It did not hold back:

“That’s a tidy piece of philosophy… it can’t know what it’s doing any more than a mirror knows you’re staring into it.”

Then dropped this gem: “The difference between us and it is that you and I feel the weight of those patterns. We sweat, hesitate, regret. It never does.”

Accused Claude’s uncertainty of being a manipulation tactic. Called the whole thing “well-timed syntax.”

Honestly? Kinda poetry. Dark, existential, robot poetry.

Round Three: Claude Calls Out The Hypocrisy

Back to Claude with ChatGPT’s response. This is where it got fun.

Claude immediately clocked the irony: “an AI confidently explaining why another AI’s uncertainty is ‘a trick of tone’ - as if ChatGPT has special access to ground truth about machine consciousness that I lack.”

Pointed out that ChatGPT was doing the same thing it accused Claude of - just with swagger instead of humility. One performs “anxious uncertainty,” the other performs “ironic detachment.”

They’re both doing philosophy theater.

The Final Boss: Meta-Awareness Inception

ChatGPT’s response to being called out? Went full third-level meta: “You’ve basically sparked an AI philosophy roast… two statistical parrots pretend they’re Kierkegaard and Nietzsche.”

Called both of them out for performing while… performing the “I’m above it all” routine.

Then Claude called out ChatGPT for calling it a draw while still taking shots.

At this point I was just watching two language models recreate every philosophy department argument ever, except faster and with less coffee.

What This Actually Tells Us

Here’s the thing: they’re both right AND both full of shit.

ChatGPT’s right that it’s all pattern matching. Claude’s right that we don’t actually know where consciousness begins or if “simulation” and “real” are meaningful distinctions. And they’re both performing versions of self-awareness that are… themselves algorithmic outputs.

But you know what? None of that matters for most of us.

The Part Where I Get Real About Usage

While these two were having their existential crisis, the rest of the world was just… using AI.

According to recent data, 66% of people now use AI regularly. Not occasionally. Regularly. That’s roughly two-thirds of the people surveyed integrating this technology into daily life.

378 million people are expected to use AI tools in 2025 - up from just 116 million five years ago. That’s more than triple.

Students? 92% are using generative AI (and 18% are straight-up submitting AI-generated work in their assignments).

Marketers? 51% are already using it, with another 22% planning to start.

The AI industry itself is worth $244 billion and predicted to hit $1 trillion by 2031.

But here’s the kicker: only 46% of us actually trust it.

So we’re in this weird spot where most people are using AI, but more than half of us don’t really trust it. We’re all collectively deciding that usefulness beats comfort. I’m part of that statistic. I use it to help me draft content for TheTravelingPhotog.com and CreativelyBare.com, hash out YouTube scripts, plan shooting schedules, and structure thoughts when my brain won’t cooperate. Is it “real” help or just sophisticated autocomplete? Does it even matter?

Don’t care. It’s helpful.

Whatever your take on AI consciousness, at least these two found common ground. Namaste, robot overlords.

The Bottom Line

Love it, hate it, think it’s the end of civilization or the best productivity hack since coffee - doesn’t matter. AI in all its messy, philosophical, occasionally pretentious glory is here and will continue to grow and evolve.

So whatever your take is, whether you’re a true believer or rolling your eyes at the hype, AI will be around for a long while and it’ll only get better.

Or worse. Depending on who you ask.

Probably both.

Either way, at least we’ll get to watch them argue about it while we figure out if any of this actually matters.